In our previous post, we explored how to send text to an LLM and receive a text response in return. That is useful for chatbots, but often we need to integrate LLMs with other systems. We may want the model to query a database, call an external API, or perform calculations.

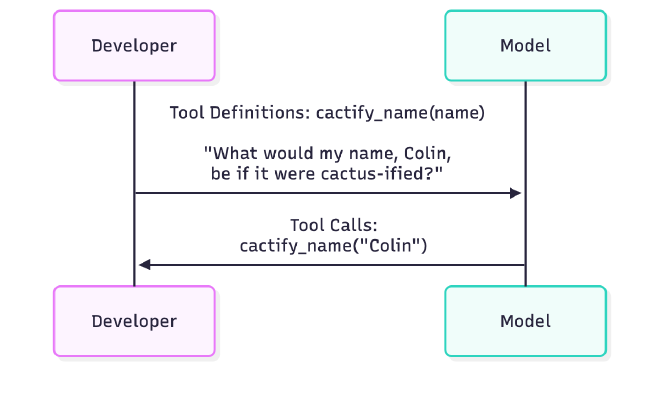

Function calling (also known as tool calling) lets you describe functions to the model, each with a schema for its arguments, so that the model can ask for them to be called as part of its response. For us, this seems like a first step towards more complex interactions with LLMs, where they not only generate text but also trigger actions based on it.

As with the previous posts, the code for this walkthrough is available on GitHub.

Defining a function schema

To start, we need to define a function that the model can use. For this example, we’ll use a silly Python function that “cactifies” a name:

```python
def cactify_name(name: str) -> str:
    """
    Makes a name more cactus-like by trimming its ending and
    appending 'actus'.

    Args:
        name: The name to be cactified.

    Returns:
        The cactified version of the name.
    """
    base_name = name

    # Rule 1: If the name ends in 's' or 'x', remove it.
    # Example: "James" -> "Jame", "Alex" -> "Ale"
    if base_name.lower().endswith(("s", "x")):
        base_name = base_name[:-1]

    # Rule 2: If the name now ends in a vowel, remove it.
    # Example: "Jame" -> "Jam", "Mike" -> "Mik", "Anna" -> "Ann"
    if base_name and base_name.lower()[-1] in "aeiou":
        base_name = base_name[:-1]

    # Add the cactus suffix
    return base_name + "actus"
```

We then need to describe this tool to the model in a format the API understands: a name, a description, and a parameters object written in JSON Schema.

```json
{
  "name": "cactify_name",
  "type": "function",
  "description": "Transforms a name into a fun, cactus-themed version.",
  "parameters": {
    "type": "object",
    "properties": {
      "name": {
        "type": "string",
        "description": "The name to be cactified."
      }
    },
    "required": ["name"]
  }
}
```
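Writing this definition by hand is fine for one tool, but the name and the parameter list are already present in the Python function's signature. Below is a minimal sketch, using only the standard library's inspect and typing modules, that derives the same structure from a function. The helper function_to_tool and the PY_TO_JSON_TYPE mapping are hypothetical names for illustration; the sketch handles only simple scalar annotations and leaves out per-parameter descriptions.

```python
import inspect
from typing import Any, Callable, get_type_hints

# Assumed mapping from simple Python annotations to JSON Schema type names
PY_TO_JSON_TYPE = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool(fn: Callable[..., Any], description: str) -> dict:
    """Build a function tool definition (same shape as above) from fn's signature."""
    hints = get_type_hints(fn)
    properties: dict[str, dict] = {}
    required: list[str] = []
    for param in inspect.signature(fn).parameters.values():
        properties[param.name] = {"type": PY_TO_JSON_TYPE[hints[param.name]]}
        # Parameters without a default value must be supplied by the model
        if param.default is inspect.Parameter.empty:
            required.append(param.name)
    return {
        "name": fn.__name__,
        "type": "function",
        "description": description,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def cactify_name(name: str) -> str:
    """Stand-in with the same signature as the real function; only the signature matters here."""
    return name + "actus"


tool = function_to_tool(cactify_name, "Transforms a name into a fun, cactus-themed version.")
print(tool["parameters"])
# {'type': 'object', 'properties': {'name': {'type': 'string'}}, 'required': ['name']}
```

Deriving the definition from the signature keeps the schema and the function from drifting apart when a parameter is renamed.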

Using curl

Following the OpenAI function calling docs, we can pass a tools parameter to the API endpoint to define functions the model can call. Here we use curl to demonstrate the raw API request:

```bash
curl https://api.openai.com/v1/responses -s \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-5-nano",
    "input": [
      {"role": "user", "content": "What would my name, Colin, be if it were cactus-ified?"}
    ],
    "tools": [
      {
        "name": "cactify_name",
        "type": "function",
        "description": "Transforms a name into a fun, cactus-themed version.",
        "parameters": {
          "type": "object",
          "properties": {
            "name": {
              "type": "string",
              "description": "The name to be made cactus-like."
            }
          },
          "required": ["name"]
        }
      }
    ]
  }'
```

The output array of the response contains a reasoning item followed by a function_call item:

```json
[
  {
    "id": "rs_0f6c0809e42d76950068c9d7930b748197a68348c3650098f5",
    "type": "reasoning",
    "summary": []
  },
  {
    "id": "fc_0f6c0809e42d76950068c9d793d714819793a8a604b41f8a10",
    "type": "function_call",
    "status": "completed",
    "arguments": "{\"name\":\"Colin\"}",
    "call_id": "call_eckxIyxX7yoEpszYMLzCblmx",
    "name": "cactify_name"
  }
]
```

Reviewing the output, we see that the model decided to call our cactify_name function with the argument "Colin". The model itself doesn’t actually execute the function. It simply returns the function call in its response. It’s up to us to handle the function execution and return the result if needed.
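Note that the arguments field is a JSON-encoded string rather than a nested object, so it needs a second decode. A small sketch using only the standard json module that pulls the requested calls out of the raw output shown above (the data is the sample response; nothing else is assumed):

```python
import json

# The "output" array from the curl response above
raw_output = r"""
[
  {"id": "rs_0f6c0809e42d76950068c9d7930b748197a68348c3650098f5",
   "type": "reasoning", "summary": []},
  {"id": "fc_0f6c0809e42d76950068c9d793d714819793a8a604b41f8a10",
   "type": "function_call", "status": "completed",
   "arguments": "{\"name\":\"Colin\"}",
   "call_id": "call_eckxIyxX7yoEpszYMLzCblmx", "name": "cactify_name"}
]
"""

items = json.loads(raw_output)

# Keep only function_call items; "arguments" is itself JSON, so decode it again
calls = [
    (item["name"], json.loads(item["arguments"]), item["call_id"])
    for item in items
    if item["type"] == "function_call"
]
print(calls)
# [('cactify_name', {'name': 'Colin'}, 'call_eckxIyxX7yoEpszYMLzCblmx')]
```

Filtering by type, rather than by position, keeps this working whether or not a reasoning item comes first.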

It’s easier to see this in action using the Python SDK.

Using the Python SDK

Now let’s see how to do the same thing using the Python SDK. We’ll define the function schema as a variable:

```python
cactify_name_schema = {
    "name": "cactify_name",
    "type": "function",
    "description": "Transform a name into a fun, cactus-themed version.",
    "parameters": {
        "type": "object",
        "properties": {"name": {"type": "string", "description": "The name to be cactified."}},
        "required": ["name"],
    },
}
```
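Because the model only proposes arguments, it can be worth checking them against this schema before running anything. OpenAI's function calling docs also describe a strict option for tool definitions; a local check like the one below is a cheap extra guard. This is a minimal sketch with a hypothetical check_arguments helper: it only checks required keys, unexpected keys and top-level types, not full JSON Schema.

```python
import json

cactify_name_schema = {
    "name": "cactify_name",
    "type": "function",
    "description": "Transform a name into a fun, cactus-themed version.",
    "parameters": {
        "type": "object",
        "properties": {"name": {"type": "string", "description": "The name to be cactified."}},
        "required": ["name"],
    },
}

# JSON Schema type names mapped to the Python types json.loads produces
JSON_TYPES = {"string": str, "integer": int, "number": (int, float),
              "boolean": bool, "object": dict, "array": list}


def check_arguments(schema: dict, arguments: str) -> list[str]:
    """Return a list of problems with model-supplied arguments (empty means OK)."""
    try:
        args = json.loads(arguments)
    except json.JSONDecodeError as exc:
        return [f"arguments are not valid JSON: {exc}"]
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]

    params = schema["parameters"]
    problems = [
        f"missing required argument {key!r}"
        for key in params.get("required", [])
        if key not in args
    ]
    for key, value in args.items():
        spec = params["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected argument {key!r}")
            continue
        expected = JSON_TYPES[spec["type"]]
        # bool is a subclass of int in Python, so rule it out for numeric types
        is_bool_as_number = isinstance(value, bool) and spec["type"] in ("integer", "number")
        if not isinstance(value, expected) or is_bool_as_number:
            problems.append(f"argument {key!r} should be of type {spec['type']}")
    return problems


print(check_arguments(cactify_name_schema, '{"name": "Colin"}'))  # []
print(check_arguments(cactify_name_schema, '{"nam": "Colin"}'))
# ["missing required argument 'name'", "unexpected argument 'nam'"]
```

Returning messages instead of raising means the problems can be sent back to the model as the function's output, giving it a chance to retry.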

Now, let’s ask the model to cactify a name using the function we defined:

```python
import openai

client = openai.Client()

input_list = [{
    "role": "user",
    "content": "What would my name, Colin, be if it were cactus-ified?"
}]

tools = [cactify_name_schema]

response = client.responses.create(
    model="gpt-5-nano",
    input=input_list,
    tools=tools,
)
```

If we inspect the response, we see that the model returned a function_call object indicating it wants to use the tool we defined:

```
ResponseFunctionToolCall(
    arguments='{"name":"Colin"}',
    name='cactify_name',
    type='function_call',
    # ...
)
```

Executing the function

Because the model only constructs the call, we have to execute the logic ourselves. We extract the arguments provided by the model and run them through our Python function:

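```python
import json

# Extract the function call from the model's response.
# With a reasoning model the first output item is usually a reasoning item,
# so look the call up by type rather than relying on its position.
function_call = next(item for item in response.output if item.type == "function_call")
args = json.loads(function_call.arguments)

# Run the function
result = cactify_name(**args)
print(f"Result: {result}")
```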

Output:

```
Result: Colinactus
```
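With more than one tool, an if statement per function name gets unwieldy. One common pattern is a registry that maps the schema's name to a Python callable. The sketch below uses hypothetical names (TOOLS, run_tool) and reports unknown tools or bad arguments as an error string instead of crashing, since that string can be passed back to the model as the function output.

```python
import json
from typing import Callable


def cactify_name(name: str) -> str:
    """Same rules as the function defined earlier in the post."""
    base_name = name
    if base_name.lower().endswith(("s", "x")):
        base_name = base_name[:-1]
    if base_name and base_name.lower()[-1] in "aeiou":
        base_name = base_name[:-1]
    return base_name + "actus"


# Registry of tools the model is allowed to call, keyed by the schema "name"
TOOLS: dict[str, Callable[..., str]] = {"cactify_name": cactify_name}


def run_tool(name: str, arguments: str) -> str:
    """Execute a requested tool and return a string suitable for function_call_output."""
    func = TOOLS.get(name)
    if func is None:
        return f"Error: unknown tool {name!r}"
    try:
        return str(func(**json.loads(arguments)))
    except (json.JSONDecodeError, TypeError) as exc:
        # Bad JSON, a non-object payload, or wrong argument names end up here
        return f"Error: could not call {name!r}: {exc}"


print(run_tool("cactify_name", '{"name": "Colin"}'))  # Colinactus
print(run_tool("cactify_name", '{"name": "James"}'))  # Jamactus
print(run_tool("water_cactus", "{}"))  # Error: unknown tool 'water_cactus'
```

Only functions placed in the registry can ever run, which also limits what a confused or manipulated model can trigger.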

Completing the loop

To get a final natural language response, we need to feed the result of the function execution back to the model. This provides the LLM with the context it requested so it can answer the user’s original question.

We append the model’s original function call and the actual output of the function to our message history:

```python
# Add the model's output items (the reasoning item and the function call) to the input list
input_list += response.output

# Append the function call output to the input list
input_list.append(
    {
        "type": "function_call_output",
        "call_id": function_call.call_id,
        "output": result,
    }
)

# Make a second call to the model
final_response = client.responses.create(
    model="gpt-5-nano",
    instructions="Tell the user what their name would be if it were cactus-ified.",
    tools=tools,
    input=input_list,
)

# output_text gathers the text from the response's message item(s)
print(final_response.output_text)
```

The model uses the data we provided to generate the final answer:

Your cactus-ified name would be "Colinactus"! 🌵
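The call_id is what ties a result back to the request that produced it: as we understand the API, each function_call item in the input should be followed by a function_call_output with the same call_id before the next request. A minimal sketch of a check for that, written against plain dicts (unanswered_calls is a hypothetical helper; with SDK objects in the list, as input_list has above, you would read item.type and item.call_id instead):

```python
def unanswered_calls(items: list[dict]) -> list[str]:
    """Return call_ids of function_call items that have no function_call_output yet."""
    requested = [item["call_id"] for item in items if item.get("type") == "function_call"]
    answered = {item["call_id"] for item in items if item.get("type") == "function_call_output"}
    return [call_id for call_id in requested if call_id not in answered]


history = [
    {"role": "user", "content": "What would my name, Colin, be if it were cactus-ified?"},
    {"type": "function_call", "call_id": "call_eckxIyxX7yoEpszYMLzCblmx",
     "name": "cactify_name", "arguments": '{"name":"Colin"}'},
]
print(unanswered_calls(history))  # ['call_eckxIyxX7yoEpszYMLzCblmx']

history.append({"type": "function_call_output",
                "call_id": "call_eckxIyxX7yoEpszYMLzCblmx", "output": "Colinactus"})
print(unanswered_calls(history))  # []
```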

There we go! We've successfully used function calling with the Python SDK. It's a silly example, but it shows the full round trip: the model requests a call, our code runs it, and the model turns the result into an answer.

Automating the workflow

In a real application, you likely wouldn’t handle these steps manually. You would want a loop that checks if the model is requesting a tool, executes it, and sends the data back until the model provides a final text response.

Here is a simplified example of what that loop might look like. It handles one round of tool calls per prompt:

```python
import json

all_messages = []


def prompt(user_input: str) -> str:
    """Prompt the model, run any tools it requests, and return its reply."""
    # Add the user input to the conversation history
    all_messages.append({"role": "user", "content": user_input})

    # Prompt the model with the conversation so far
    response = client.responses.create(
        model="gpt-5-nano",
        tools=tools,
        input=all_messages,
    )

    # Keep every output item (reasoning, function calls, messages) in the history
    all_messages.extend(response.output)

    function_calls = [item for item in response.output if item.type == "function_call"]

    # No tool requested: the model answered directly
    if not function_calls:
        return response.output_text

    # Answer every requested call before asking the model again
    for call in function_calls:
        function_args = json.loads(call.arguments)

        # Execute the function based on its name
        if call.name == "cactify_name":
            result = cactify_name(**function_args)
        else:
            result = f"Unknown function: {call.name}"

        # Add the function call output, using the format expected by the API
        all_messages.append(
            {
                "type": "function_call_output",
                "call_id": call.call_id,
                "output": json.dumps(result),
            }
        )

    # Now feed the function results back to the model
    final_response = client.responses.create(
        model="gpt-5-nano",
        instructions="Respond with what the name would be if it were cactus-ified, in a sentence.",
        tools=tools,
        input=all_messages,
    )

    all_messages.extend(final_response.output)
    return final_response.output_text
```

Now we can use this function to interact with the model and have it call our function as needed:

```python
print(prompt("What would my name, Colin, be if it were cactus-ified?"))
# Output: Colin would be Colinactus.

print(prompt("What about Simon?"))
# Output: Simon would be Simonactus.

print(prompt("What names did I ask about"))
# Output: You asked about Colin and Simon.
```
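The prompt function above stops after one round: if the model asks for another tool after seeing the first result, that request goes unanswered. A sketch of the more general loop, which keeps going until the model replies without requesting a tool, is below. To keep it runnable offline, call_model stands in for client.responses.create and the items are simplified dicts (a message item here carries a plain text field, which the real API's message items do not). run_agent and scripted_model are hypothetical names, and unknown tool names are not handled.

```python
import json
from typing import Callable


def cactify_name(name: str) -> str:
    """Same rules as the function defined earlier in the post."""
    base_name = name
    if base_name.lower().endswith(("s", "x")):
        base_name = base_name[:-1]
    if base_name and base_name.lower()[-1] in "aeiou":
        base_name = base_name[:-1]
    return base_name + "actus"


TOOLS = {"cactify_name": cactify_name}


def run_agent(call_model: Callable[[list[dict]], list[dict]],
              history: list[dict], max_rounds: int = 5) -> str:
    """Call the model until it answers with text instead of requesting tools."""
    for _ in range(max_rounds):
        output = call_model(history)
        history.extend(output)
        calls = [item for item in output if item["type"] == "function_call"]
        if not calls:
            # No tool requested: return the text of the first message item
            return next(item["text"] for item in output if item["type"] == "message")
        # Answer every call from this round before asking the model again
        for call in calls:
            result = TOOLS[call["name"]](**json.loads(call["arguments"]))
            history.append({"type": "function_call_output",
                            "call_id": call["call_id"], "output": result})
    raise RuntimeError(f"model still requesting tools after {max_rounds} rounds")


def scripted_model(history: list[dict]) -> list[dict]:
    """Fake model: requests two tool calls, then answers using their outputs."""
    outputs = [item["output"] for item in history if item.get("type") == "function_call_output"]
    if not outputs:
        return [
            {"type": "function_call", "call_id": "call_1",
             "name": "cactify_name", "arguments": '{"name": "Colin"}'},
            {"type": "function_call", "call_id": "call_2",
             "name": "cactify_name", "arguments": '{"name": "Simon"}'},
        ]
    return [{"type": "message", "text": " and ".join(outputs)}]


history = [{"role": "user", "content": "Cactify Colin and Simon"}]
print(run_agent(scripted_model, history))  # Colinactus and Simonactus
```

The max_rounds cap is a design choice rather than an API requirement: it stops a model that keeps requesting tools from looping forever.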

Conclusion

Hopefully, this clarifies how function calling works under the hood. While this example was small, the pattern stays the same whether you’re cactifying names or querying a production database. We’re looking forward to applying this to more practical use cases in the rest of the series.

Next up, we’ll explore function calling with Ollama.